Will.0.W1sp, 2005-2008

Concept/direction: Kirk Woolford

Sound: Carlos Guedes

Movement: Ailed Izurieta, Patrizia Penev, Marjolein Vogels

ABSTRACT

Will.0.W1sp is an interactive installation exploring our ability to recognise human motion without human form. It uses particle systems to create characters or “whisps” with their own drifting, flowing movement, but which also follow digitised human movements. The central point of the environment is a 2x6m curved screen, which allows the whisps to be projected at human scale while giving them enough space to move and avoid visitors through a combination of video tracking and motion sensors. If visitors move quickly in the space, the particle flow becomes erratic. If a visitor moves suddenly toward the whisps, they explode. The installation system uses custom particle targets and funnels instead of traditional emitters. It performs real-time motion analysis on both the prerecorded motion capture sequences and the movement of the audience to determine how to route the particles across the scene. The motion vectors are simultaneously fed to an audio system that uses audio grains to create sound flowing in sync with the imagery. Will.0.w1sp invites visitors to chase after virtual, intangible characters which continually scatter and reform just beyond their reach.

INTRODUCTION

Will-o’-the-Whisp, Irrlicht, Candelas: nearly every culture has a name for the mysterious blue-white lights seen drifting through marshes and meadows. Whether they are lights of trooping faeries, wandering souls, or glowing swamp gas, they all exhibit the same behavior. They dance ahead of people, but when approached, they vanish and reappear just out of reach. Will.0.w1sp creates new dances of these mysterious lights, but just as with the originals, when a viewer ventures too close, the lights scatter, spin, and spiral, then reform and continue the dance just beyond the viewer’s reach.

Will.0.W1sp is based on real-time particle systems that move dots smoothly around an environment, like fireflies. The particles have their own drifting, flowing movement, but also follow digitised human movements. They shift from one captured sequence to another: performing 30 seconds of one sequence, scattering, then reforming into 1 minute of another sequence by another dancer. In addition to generating the particle systems, the computer watches the positions of viewers around the installation. If an audience member comes too close to the screen, the movement either shifts to another part of the screen or scatters completely.

OVERVIEW

The human visual system is fine-tuned to recognise both movement and other human beings. However, the entire human perceptual process attempts to categorise sensations. Once sensations have been placed in their proper categories, most are ignored and only a select few pass into consciousness. This is why we can walk through a city and be only scarcely aware of the thousands of people we pass, but immediately recognise someone who looks or “walks like” a close friend.

Will.0 plays with human perception by giving visitors something that moves like a human being, but denies categorisation. It grabs and holds visitors’ attention at a very deep level. In order to trigger the parts of the visual system tuned to human movement, the movement driving the particles is captured from live dancers using motion capture techniques. While the installation is running, the system decides whether to flow smoothly from one motion sequence into another, make an abrupt change in movement, or switch to pedestrian motions such as sitting, walking off screen, etc. These decisions are based on the position and movement of observers in the space.

The choreography is arranged into a series of movement sequences which flow through specific poses. It is a mix of shifting approaches and retreats as the system plays through its sequences and responds to the presence and movements of the audience.

SOUND

While the installation attempts to present human movement without human beings, it also pulls visitors into a sonic atmosphere somewhere between the installation space and an outdoor space at night. The sound has underlying melodies punctuated by crickets, goat bells, and scruffing sounds from heavy creatures moving in the dark. All of this is generated and mixed live by software watching the flow and positions of the particles in the space.

The sound software is a Max/MSP patch with custom plug-ins written by Carlos Guedes. Because generating the sound uses so much CPU power, it runs on a separate computer. Particle motion data, overall tempo, and x, y, and z offsets are all transmitted from the computer controlling the particles to the sound computer using Open Sound Control streams.
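As a rough illustration of what such a stream carries, the sketch below packs three offsets into a single Open Sound Control message and sends it over UDP using only the Python standard library. The address `/w1sp/offset`, the function names, and the host and port are hypothetical; the installation's actual message layout is not described here.

```python
import socket
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * ((-len(raw)) % 4)


def osc_message(address: str, *values: float) -> bytes:
    """Build an OSC 1.0 message whose arguments are all 32-bit big-endian floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return osc_string(address) + osc_string(type_tags) + payload


def send_offsets(sock: socket.socket, host: str, port: int,
                 x: float, y: float, z: float) -> None:
    """Send one x/y/z offset triple to the sound computer (address is hypothetical)."""
    sock.sendto(osc_message("/w1sp/offset", x, y, z), (host, port))
```

A sender would create one UDP socket with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and call `send_offsets` once per animation frame; on the receiving side, Max/MSP can read such packets with its `udpreceive` object.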

MOTION DATA

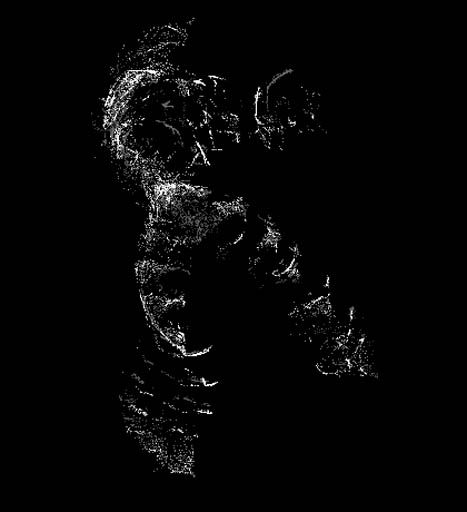

The installation uses a small database of motion sequences as the base animation data for the particle dancers. Initial test sequences were created with a Hypervision Reactor 3D motion capture system. However, the system used for these tests required a great deal of “data cleaning”, or manual correction of motion data. Sometimes it was necessary to adjust 32 points for every frame of capture data. We decided this was just as time-consuming as manually rotoscoping data, so we created an intelligent rotoscoping package called “Rotocope”, shown in Figure 1. Rotocope allowed us to manually position markers over key frames in video-taped movement sequences.

PRESENTATION

The project is presented on a custom-designed screen. The screen is slightly taller than a human and curves slightly from the center to the edges. This keeps the images at a human scale while completely filling the audience’s field of vision. At the same time, it allows movement at the edges to shift and disperse more quickly than movement in the center. At 2.2m x 6.0m, the screen is much wider than standard high-def format, creating more space for the image to shift and flow. It is driven by two synchronised video projectors.

INTERACTION

A close reading of the algorithms outlined in sections 4 and 5 will reveal that the entire character of the Will.0.w1sp system revolves around two simple values: the maximum velocity and maximum acceleration of the particles. As mentioned in section 3, increasing the max velocity of the particles allows them to track their motion targets more closely, and the particles begin to take on the form of the original dancer. Increasing the acceleration narrows the width of this dancer. At the same time, decreasing the velocity while increasing the acceleration causes the particles to shoot off in nearly straight lines. In effect, it explodes the virtual dancer.
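To make the role of these two limits concrete, here is a minimal sketch of a generic seek-style particle update in which both limits appear as clamps. It is an assumption for illustration, not the installation's algorithm: the function names, the 2D simplification, and the steering rule (steer toward the target, clamp the velocity change, then clamp the speed) are all hypothetical.

```python
import math


def clamp_length(x: float, y: float, limit: float) -> tuple:
    """Scale the vector (x, y) down so its length does not exceed `limit`."""
    length = math.hypot(x, y)
    if length > limit and length > 0.0:
        scale = limit / length
        return x * scale, y * scale
    return x, y


def step_particle(pos, vel, target, max_velocity, max_acceleration):
    """Advance one particle by one frame toward its motion target.

    The desired velocity points straight at the target. The change in
    velocity per frame is limited by max_acceleration, and the resulting
    speed is limited by max_velocity.
    """
    desired_x, desired_y = target[0] - pos[0], target[1] - pos[1]
    ax, ay = clamp_length(desired_x - vel[0], desired_y - vel[1], max_acceleration)
    vx, vy = clamp_length(vel[0] + ax, vel[1] + ay, max_velocity)
    return (pos[0] + vx, pos[1] + vy), (vx, vy)
```

In a rule of this kind, a particle with a low velocity limit lags behind a fast-moving target, while a generous limit lets it stay close, which is consistent with the tracking behaviour described above.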

The Will.0.W1sp interaction system is a separate program which tracks the positions and actions of visitors, and decides how to modulate these two core variables. It also controls where on the 6m curved screen the dancer positions itself.

Inverse Interaction

When many visitors walk into an “interactive” environment, the first thing they do is to walk up to the screen and start waving their arms madly to see the piece “interact”. Will.0.W1sp responds the same as most humans or animals. It scatters and waits for the person to calm down and stay in one place for a minute. It uses inverse interaction. The installation uses interactive techniques not to drive itself, but to calm down the viewer. When the viewers realize the system is responding to them, they eventually extend the installation the same respect they would extend a live performer.

Tracking techniques

The first version of Will.0.w1sp used an overhead camera and image analysis software to track visitors. This worked as long as the lighting conditions could be controlled, but was otherwise unstable. When Will.0.w1sp was invited to represent Funcaion Telecom at ARCO’06 in Madrid, a robust tracking system had to be developed which could handle up to 50 people in front of the screen at once, and a continual flow of more than 2,000 people a day.

We replaced the overhead camera with an array of passive infrared motion detectors connected to an Arduino [3] open source microcontroller system. We developed a control system for this sensor array using the “Processing” [4] open source programming environment together with the “oscP5” Open Sound Control library for Processing developed by Andreas Schlegel. The tracking system records motion in 9 different zones and calculates max velocity, acceleration, and character position based on the positions and overall motion of viewers. It uses its own timers to suddenly ramp up values and slowly return them to normal when it no longer sees any motion in any of its sensor zones.
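The ramp-and-recover timing can be sketched as a control value that jumps to a peak when any zone reports motion and eases linearly back to its resting level once the zones go quiet. Everything below is an illustrative assumption written in Python rather than Processing: the class and function names, the linear recovery, the layout of the nine zones as a single row, and the rule that the character retreats to the zone farthest from any detected motion.

```python
NUM_ZONES = 9  # nine zones as reported; arranging them in a row is an assumption


class RampedValue:
    """A control value that jumps to `peak` on motion and eases back to `rest`."""

    def __init__(self, rest: float, peak: float, recovery_seconds: float):
        self.rest = rest
        self.peak = peak
        self.rate = abs(peak - rest) / recovery_seconds  # units per second
        self.value = rest

    def trigger(self) -> None:
        """Sudden ramp: jump straight to the peak value."""
        self.value = self.peak

    def relax(self, dt: float) -> None:
        """Slow return: move toward the resting value without overshooting it."""
        step = self.rate * dt
        if self.value > self.rest:
            self.value = max(self.rest, self.value - step)
        else:
            self.value = min(self.rest, self.value + step)


def quietest_zone(active: list) -> int:
    """Return the zone farthest from every active zone (ties go to the lowest index)."""
    if not active:
        return NUM_ZONES // 2  # nobody moving: stay in the middle of the screen
    return max(range(NUM_ZONES), key=lambda z: min(abs(z - a) for a in active))


def update(zones: list, controls: list, dt: float) -> int:
    """Process one sensor reading; `zones` holds one boolean per sensor zone."""
    active = [i for i, fired in enumerate(zones) if fired]
    for control in controls:
        if active:
            control.trigger()
        else:
            control.relax(dt)
    return quietest_zone(active)
```

Because `peak` may be lower than `rest`, the same class can model a value that drops suddenly, such as a maximum velocity cut at the same moment the maximum acceleration is raised.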

CONCLUSION

The installation is very successful in its ability to generate dynamic images which walk the line between recognition as human and other. Visitors to the installation often feel they are in the space with another “live” entity which is not quite human. After initially playing around to see the particles scatter, they will often sit for an hour or more to watch them perform.

ACKNOWLEDGMENTS

Will.0.w1sp was funded by a grant from the Amsterdams Fonds voor de Kunst and supported by the Lancaster Institute for the Contemporary Arts, Lancaster University.

REFERENCES

[1] C. Guedes, “The m-objects: A small library for musical generation and musical tempo control from dance movement in real time.” Proceedings of the International Computer Music Conference, International Computer Music Association, 2005, pp. 794-797

[2] C. Guedes, “Extracting musically-relevant rhythmic information from dance movement by applying pitch-tracking techniques to a video signal.” Proceedings of the Sound and Music Computing Conference SMC06, Marseille, France, 2006, pp. 25-33

[3] http://www.arduino.cc/

[4] http://www.processing.org/